RFP Evaluation Matrix Template

Learn how to build an RFP evaluation matrix for objective vendor proposal scoring. Includes weighting guide, scoring scales, and downloadable template.

SpecLens Team

Procurement & AI Experts

Subjective vendor evaluation creates problems—inconsistent decisions, bias, and difficulty defending choices. An RFP evaluation matrix transforms proposal assessment from gut feeling to structured analysis.

This comprehensive guide shows you how to design, weight, and use evaluation matrices for objective, defensible vendor selection.

Why Use an Evaluation Matrix

The Problem with Subjective Evaluation

Without structured evaluation:

| Problem | Consequence |
|---|---|
| Inconsistent criteria | Different standards applied to different vendors |
| Bias influence | Personal preferences override merit |
| Difficult justification | Can't explain why one vendor over another |
| Stakeholder conflict | Different opinions with no resolution method |
| Audit concerns | Subjective decisions invite scrutiny |

What Evaluation Matrices Provide

| Benefit | Description |
|---|---|
| Objectivity | Same criteria applied to all vendors |
| Transparency | Clear scoring rationale |
| Documentation | Written record of decision basis |
| Stakeholder alignment | Agree on criteria before evaluation |
| Defensibility | Objective basis if challenged |
| Better decisions | Systematic consideration of all factors |

When to Use Evaluation Matrices

| Procurement Type | Use a Matrix? |
|---|---|
| Complex RFPs | Always |
| Significant purchases | Always |
| Multi-stakeholder decisions | Always |
| Simple RFQs (price-only) | Usually not needed |
| Commodity purchases | Usually not needed |

Matrix Structure

Evaluation Dimensions

Most evaluation matrices include these categories:

| Category | What It Evaluates |
|---|---|
| Technical capability | Solution fit, specifications, features |
| Price/cost | Initial cost, TCO, value |
| Vendor qualification | Experience, stability, references |
| Implementation | Timeline, approach, resources |
| Support/service | Ongoing support, service levels |

Category Breakdown

Technical Capability (typically 30-50% of total weight)

| Sub-criterion | Weight | What to Evaluate |
|---|---|---|
| Specification compliance | High | Meets mandatory requirements |
| Performance capability | High | Can deliver required performance |
| Feature completeness | Medium | Has needed/desired features |
| Technology currency | Medium | Modern, supported technology |
| Scalability | Medium | Can grow with needs |
| Integration capability | Medium | Works with existing systems |

Price/Cost (typically 25-40% of total weight)

| Sub-criterion | Weight | What to Evaluate |
|---|---|---|
| Initial price | High | Acquisition cost |
| Ongoing costs | High | Maintenance, support, consumables |
| Total cost of ownership | High | Full lifecycle cost |
| Pricing transparency | Medium | Clear, complete pricing |
| Value for money | Medium | Cost relative to capability |

Vendor Qualification (typically 15-25% of total weight)

| Sub-criterion | Weight | What to Evaluate |
|---|---|---|
| Relevant experience | High | Similar implementations |
| Financial stability | Medium | Ability to serve long-term |
| References | Medium | Customer satisfaction |
| Industry expertise | Medium | Domain knowledge |
| Company stability | Medium | Track record, trajectory |

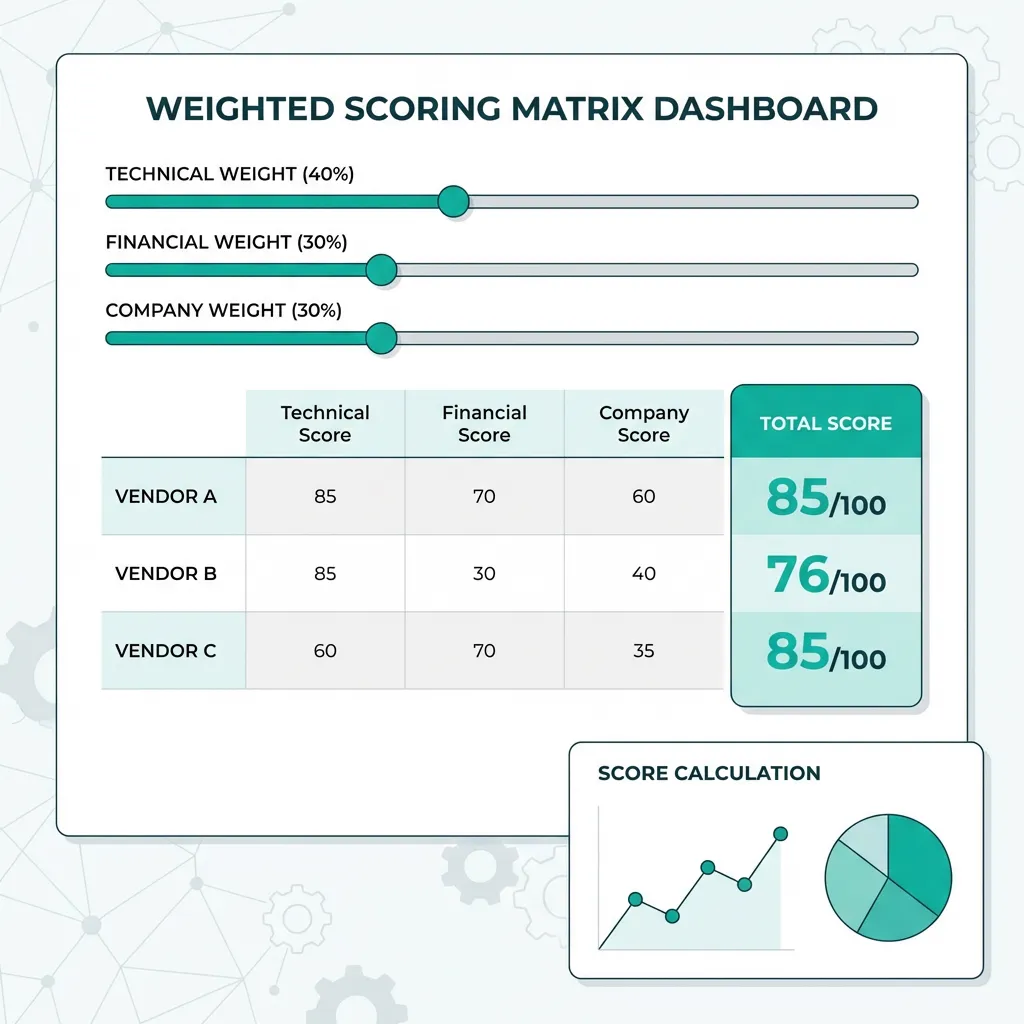

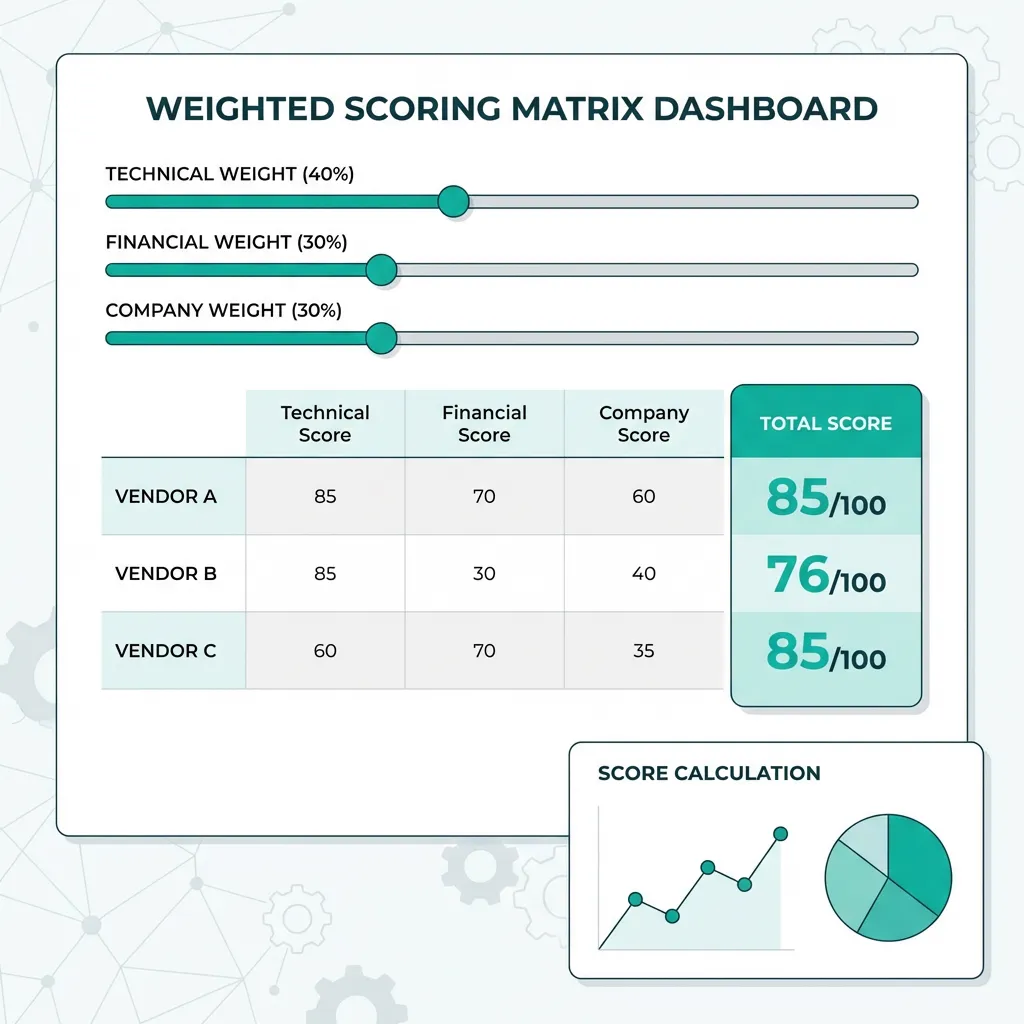

Weighting Guidelines

Determining Weights

Weight allocation depends on what matters most for your procurement:

| Priority Emphasis | Weight Adjustment |
|---|---|
| Cost-critical purchase | Increase price weight to 40%+ |
| Mission-critical system | Increase technical weight to 50%+ |
| Implementation-focused | Increase implementation weight |
| Long-term partnership | Increase vendor qualification weight |
| Risk-averse environment | Increase vendor stability weight |
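Raising one category's weight means the other categories have to give up share so the total stays at 100%. The sketch below assumes one simple policy, proportional rescaling of the remaining categories; `set_category_weight` is a hypothetical helper, and the 40/30/20/10 baseline simply mirrors the sample matrix later in this guide rather than a recommended default.

```python
def set_category_weight(
    weights: dict[str, float], category: str, new_weight: float
) -> dict[str, float]:
    """Set one category's weight, then rescale the other categories proportionally
    so that all weights still sum to 1.0 (i.e., 100%)."""
    if category not in weights:
        raise KeyError(f"unknown category: {category}")
    if not 0.0 <= new_weight <= 1.0:
        raise ValueError("new_weight must be between 0 and 1")
    others_total = sum(w for name, w in weights.items() if name != category)
    if others_total == 0:
        raise ValueError("no other weight to rescale")
    remaining = 1.0 - new_weight  # share left for every other category
    return {
        name: new_weight if name == category else w / others_total * remaining
        for name, w in weights.items()
    }


# Hypothetical baseline weights (the sample matrix's 40/30/20/10 split)
baseline = {"Technical": 0.40, "Price": 0.30, "Vendor": 0.20, "Support": 0.10}

# Cost-critical purchase: raise price to 40% and let the rest shrink proportionally
cost_critical = set_category_weight(baseline, "Price", 0.40)
print({name: round(w, 3) for name, w in cost_critical.items()})
# {'Technical': 0.343, 'Price': 0.4, 'Vendor': 0.171, 'Support': 0.086}
```

Proportional rescaling keeps the other categories in the same ratio to each other. If stakeholders would rather take the extra price weight from one specific category, adjust that category directly instead.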

Weighting Methods

- Direct allocation: Assign percentage weights that sum to 100%. Simple and effective.

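- Pairwise comparison (AHP, the Analytic Hierarchy Process): Compare each criterion against every other criterion for relative importance. The weights are then derived mathematically from those comparisons, so they reflect the priorities the group actually expressed.

- Point allocation: Give each stakeholder 100 "currency points" to spend on the criteria they value most. Add up each criterion's points across stakeholders and divide by the grand total to get the group weights.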
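Both point allocation and pairwise comparison reduce to a little arithmetic. The sketch below is illustrative only: the stakeholder allocations and pairwise judgments are made-up inputs, and `ahp_weights` uses the row geometric-mean approximation to AHP priorities rather than the full principal-eigenvector calculation (the two agree when the comparisons are perfectly consistent, as they are in this example).

```python
import math


def point_allocation_weights(allocations: list[dict[str, float]]) -> dict[str, float]:
    """Pool each stakeholder's 100 points per criterion and normalize to weights summing to 1."""
    totals: dict[str, float] = {}
    for points in allocations:
        if abs(sum(points.values()) - 100) > 1e-9:
            raise ValueError("each stakeholder must spend exactly 100 points")
        for criterion, p in points.items():
            totals[criterion] = totals.get(criterion, 0.0) + p
    grand_total = sum(totals.values())
    return {criterion: p / grand_total for criterion, p in totals.items()}


def ahp_weights(criteria: list[str], matrix: list[list[float]]) -> dict[str, float]:
    """Approximate AHP weights from a reciprocal pairwise-comparison matrix.

    matrix[i][j] says how much more important criteria[i] is than criteria[j]
    (e.g., on Saaty's 1-9 scale), with matrix[j][i] == 1 / matrix[i][j].
    Each weight is the geometric mean of a row, normalized so weights sum to 1.
    """
    n = len(criteria)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return {c: g / total for c, g in zip(criteria, geo_means)}


# Hypothetical inputs
stakeholders = [
    {"Technical": 50, "Price": 30, "Vendor": 20},
    {"Technical": 30, "Price": 50, "Vendor": 20},
]
print(point_allocation_weights(stakeholders))
# {'Technical': 0.4, 'Price': 0.4, 'Vendor': 0.2}

criteria = ["Technical", "Price", "Vendor"]
pairwise = [
    [1, 2, 4],          # Technical is 2x as important as Price, 4x Vendor
    [1 / 2, 1, 2],      # Price is 2x as important as Vendor
    [1 / 4, 1 / 2, 1],
]
print({c: round(w, 3) for c, w in ahp_weights(criteria, pairwise).items()})
# {'Technical': 0.571, 'Price': 0.286, 'Vendor': 0.143}
```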

Weight Validation

Before finalizing weights, test with scenarios:

| Scenario | Test Question |
|---|---|
| Cheap but risky | Would low price outweigh vendor concerns? |
| Expensive but excellent | Would superior quality outweigh higher cost? |
| Feature gaps | How much do missing features matter? |
| Experience variance | How much does experience count? |

Adjust weights until outcomes match judgment.
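One way to run these scenario tests is to score a few deliberately extreme, made-up vendor profiles under each candidate weight set and see which profile wins. The profiles, category scores, and weight sets below are hypothetical; the test is simply whether each winner matches what the team would judge to be the right outcome.

```python
# Hypothetical extreme profiles: category scores on the 0-5 scale
PROFILES = {
    "Cheap but risky": {"Technical": 3, "Price": 5, "Vendor": 2, "Support": 3},
    "Expensive but excellent": {"Technical": 5, "Price": 2, "Vendor": 5, "Support": 5},
}

# Hypothetical candidate weight sets under review
WEIGHT_SETS = {
    "Balanced": {"Technical": 0.40, "Price": 0.30, "Vendor": 0.20, "Support": 0.10},
    "Cost-critical": {"Technical": 0.25, "Price": 0.55, "Vendor": 0.10, "Support": 0.10},
}


def weighted_total(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of score x weight across categories."""
    return sum(scores[category] * w for category, w in weights.items())


def scenario_winners(profiles, weight_sets) -> dict[str, str]:
    """For each weight set, return the name of the highest-scoring profile."""
    winners = {}
    for set_name, weights in weight_sets.items():
        if abs(sum(weights.values()) - 1.0) > 1e-9:
            raise ValueError(f"{set_name} weights must sum to 100%")
        totals = {name: weighted_total(scores, weights) for name, scores in profiles.items()}
        winners[set_name] = max(totals, key=totals.get)
    return winners


for set_name, winner in scenario_winners(PROFILES, WEIGHT_SETS).items():
    print(f"{set_name}: {winner} wins")
# Balanced: Expensive but excellent wins
# Cost-critical: Cheap but risky wins
```

Here the cost-critical weighting lets a vendor with weak qualification scores win. If the team is uncomfortable with that outcome, it is the weights, not the scores, that need adjusting.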

Scoring Scales

5-Point Scale with 0 for Non-Compliance (Recommended)

| Score | Definition | Description |
|---|---|---|
| 5 | Exceptional | Exceeds requirements, best in class |
| 4 | Good | Fully meets requirements |
| 3 | Acceptable | Meets minimum requirements |
| 2 | Below expectations | Partially meets, minor gaps |
| 1 | Poor | Significant gaps, fails requirements |
| 0 | Non-compliant | Does not meet a mandatory requirement |

Technical Specification Compliance Scoring Example

| Score | Definition |
|---|---|
| 5 | Exceeds all specifications with meaningful advantages |
| 4 | Meets all specifications |
| 3 | Meets mandatory specifications with minor desirable gaps |
| 2 | Minor mandatory specification gaps |
| 1 | Significant specification gaps |
| 0 | Fails mandatory requirements |

Price Competitiveness Scoring Example

| Score | Definition |
|---|---|
| 5 | Lowest price and excellent value |
| 4 | Among lowest-priced with good value |
| 3 | Mid-range pricing with reasonable value |
| 2 | Above average pricing |
| 1 | Highest-priced without clear justification |

Managing Bias in Evaluation

Even with a matrix, unconscious bias creeps in. Here's how to fight it:

The "Halo Effect"

Bias: Scorer loves the vendor's sleek presentation, so they score the technical section higher than it deserves.

Mitigation: Score blindly (remove vendor names if possible) or score horizontally (score everyone on Question 1, then everyone on Question 2) rather than one vendor at a time.

Anchoring Bias

Bias: The first proposal read sets the "standard" for the rest.

Mitigation: Rotate the order. Have Evaluator A read Vendor 1 first, and Evaluator B read Vendor 3 first.

Confirmation Bias

Bias: The evaluator already prefers Vendor A, so they dismiss its flaws and magnify its strengths.

Mitigation: Require written comments for all scores of 1 or 5. Force justification.

Pro Tip: Consider blind scoring—have an admin remove all vendor logos and names, labeling them "Vendor A," "Vendor B," etc. Only reveal identities after scores are locked.
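Blind labels, and the rotated reading orders suggested for anchoring bias, can be generated mechanically so nobody picks them by hand. This is a minimal sketch: the vendor names are placeholders, `blind_labels` and `rotated_orders` are hypothetical helpers, and the shuffle seed exists only to make the example repeatable.

```python
import random
import string
from typing import Optional


def blind_labels(vendor_names: list[str], seed: Optional[int] = None) -> dict[str, str]:
    """Map each real vendor name to an anonymous label ("Vendor A", "Vendor B", ...).

    Names are shuffled first so the labels reveal nothing (e.g., submission order).
    The returned mapping is the key the administrator keeps until scores are locked.
    """
    if len(vendor_names) > len(string.ascii_uppercase):
        raise ValueError("more than 26 vendors; extend the labeling scheme")
    rng = random.Random(seed)
    shuffled = list(vendor_names)
    rng.shuffle(shuffled)
    return {name: f"Vendor {letter}" for name, letter in zip(shuffled, string.ascii_uppercase)}


def rotated_orders(labels: list[str], evaluators: list[str]) -> dict[str, list[str]]:
    """Give each evaluator the same proposals, each starting from a different one."""
    ordered = sorted(labels)
    n = len(ordered)
    if n == 0:
        return {evaluator: [] for evaluator in evaluators}
    return {
        evaluator: ordered[i % n:] + ordered[: i % n]
        for i, evaluator in enumerate(evaluators)
    }


# Placeholder vendor names; only the administrator sees this mapping
key = blind_labels(["Acme Corp", "Globex", "Initech"], seed=7)
orders = rotated_orders(list(key.values()), ["Evaluator 1", "Evaluator 2", "Evaluator 3"])
for evaluator, order in orders.items():
    print(evaluator, order)
# Evaluator 1 ['Vendor A', 'Vendor B', 'Vendor C']
# Evaluator 2 ['Vendor B', 'Vendor C', 'Vendor A']
# Evaluator 3 ['Vendor C', 'Vendor A', 'Vendor B']
```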

Sample Evaluation Matrix

Each cell shows the raw score (0-5) followed, in parentheses, by the weighted score (raw score × weight). Each vendor's total is the sum of its weighted scores, out of a possible 5.00.

| Category | Weight | Vendor A | Vendor B | Vendor C |
|---|---|---|---|---|
| Technical | 40% | | | |
| - Specification compliance | 20% | 4 (0.80) | 5 (1.00) | 3 (0.60) |
| - Features | 12% | 4 (0.48) | 4 (0.48) | 4 (0.48) |
| - Integration | 8% | 3 (0.24) | 5 (0.40) | 4 (0.32) |
| Price | 30% | | | |
| - Total cost | 20% | 4 (0.80) | 3 (0.60) | 5 (1.00) |
| - Value | 10% | 4 (0.40) | 4 (0.40) | 4 (0.40) |
| Vendor | 20% | | | |
| - Experience | 10% | 5 (0.50) | 4 (0.40) | 3 (0.30) |
| - References | 10% | 4 (0.40) | 4 (0.40) | 4 (0.40) |
| Support | 10% | | | |
| - SLA | 6% | 4 (0.24) | 5 (0.30) | 3 (0.18) |
| - Resources | 4% | 4 (0.16) | 4 (0.16) | 4 (0.16) |
| TOTAL | 100% | 4.02 | 4.14 | 3.84 |

Result: Vendor B scores highest (4.14 out of 5) and is recommended for selection.
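The sample matrix is plain weighted-sum arithmetic: each raw score is multiplied by its sub-criterion weight, and the products are added up. The sketch below reproduces the table's totals from its raw scores; grouping the products by category also yields the category-level figures an executive summary would show. The data layout and the `score_vendor` helper are assumptions for illustration, not a prescribed template format.

```python
# (category, sub-criterion, weight) for each row of the sample matrix
CRITERIA = [
    ("Technical", "Specification compliance", 0.20),
    ("Technical", "Features", 0.12),
    ("Technical", "Integration", 0.08),
    ("Price", "Total cost", 0.20),
    ("Price", "Value", 0.10),
    ("Vendor", "Experience", 0.10),
    ("Vendor", "References", 0.10),
    ("Support", "SLA", 0.06),
    ("Support", "Resources", 0.04),
]

# Raw 0-5 scores, in the same order as CRITERIA
RAW_SCORES = {
    "Vendor A": [4, 4, 3, 4, 4, 5, 4, 4, 4],
    "Vendor B": [5, 4, 5, 3, 4, 4, 4, 5, 4],
    "Vendor C": [3, 4, 4, 5, 4, 3, 4, 3, 4],
}

if abs(sum(w for _, _, w in CRITERIA) - 1.0) > 1e-9:
    raise ValueError("weights must sum to 100%")


def score_vendor(raw: list[int]) -> tuple[dict[str, float], float]:
    """Return weighted subtotals per category and the overall weighted total (max 5.0)."""
    if len(raw) != len(CRITERIA):
        raise ValueError("one raw score is needed per sub-criterion")
    subtotals: dict[str, float] = {}
    for (category, _name, weight), score in zip(CRITERIA, raw):
        if not 0 <= score <= 5:
            raise ValueError(f"score {score} is outside the 0-5 scale")
        subtotals[category] = subtotals.get(category, 0.0) + score * weight
    return subtotals, sum(subtotals.values())


totals = {vendor: score_vendor(raw)[1] for vendor, raw in RAW_SCORES.items()}
for vendor in sorted(totals, key=totals.get, reverse=True):
    print(f"{vendor}: {totals[vendor]:.2f}")
# Vendor B: 4.14
# Vendor A: 4.02
# Vendor C: 3.84
```

`score_vendor(RAW_SCORES["Vendor A"])[0]` gives Vendor A's category subtotals (Technical 1.52, Price 1.20, Vendor 0.90, Support 0.40), which add up to its 4.02 total.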

Conducting the Evaluation Workshop

Don't just email spreadsheets and wait. Run a structured workshop.

1. Preparation

Distribute proposals and scoring sheets 3-5 days before the meeting. Evaluators must read and draft scores individually before the room convenes.

2. The Meeting (Calibration)

Go criterion by criterion. "For 'Technical Architecture', what did everyone give Vendor A?"

- Evaluator 1: "I gave a 4."

- Evaluator 2: "I gave a 4."

- Evaluator 3: "I gave a 1."

Stop! Discuss the outlier. Evaluator 3 might have found a security flaw the others missed. Or Evaluator 3 might have misunderstood the requirement. Debate facts, not feelings, until the group calibrates.

3. Finalization

Lock in the scores in the meeting. Do not let people "think about it" overnight, or external politics will change the results.
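Before the calibration meeting, the facilitator can pre-compute which scores need discussion so the session goes straight to the disagreements. The sketch below flags any evaluator whose draft score sits at least two points from the group median; the two-point threshold on the 0-5 scale, the score-sheet layout, and the helper names are assumptions to tune, not rules from this guide.

```python
from statistics import median


def flag_outliers(scores: dict[str, float], threshold: float = 2) -> list[str]:
    """Return evaluators whose score is at least `threshold` points from the group median."""
    mid = median(scores.values())
    return [evaluator for evaluator, s in scores.items() if abs(s - mid) >= threshold]


def calibration_agenda(
    sheet: dict[tuple[str, str], dict[str, float]], threshold: float = 2
) -> list[tuple[str, str, list[str]]]:
    """List (criterion, vendor, outlier evaluators) for every cell that needs discussion."""
    agenda = []
    for (criterion, vendor), scores in sheet.items():
        outliers = flag_outliers(scores, threshold)
        if outliers:
            agenda.append((criterion, vendor, outliers))
    return agenda


# Hypothetical draft scores submitted before the meeting
sheet = {
    ("Technical Architecture", "Vendor A"): {"Evaluator 1": 4, "Evaluator 2": 4, "Evaluator 3": 1},
    ("Technical Architecture", "Vendor B"): {"Evaluator 1": 4, "Evaluator 2": 3, "Evaluator 3": 4},
}
print(calibration_agenda(sheet))
# [('Technical Architecture', 'Vendor A', ['Evaluator 3'])]
```

The median is used instead of the mean because a single outlier pulls the mean toward itself, which can hide exactly the disagreement the meeting needs to surface.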

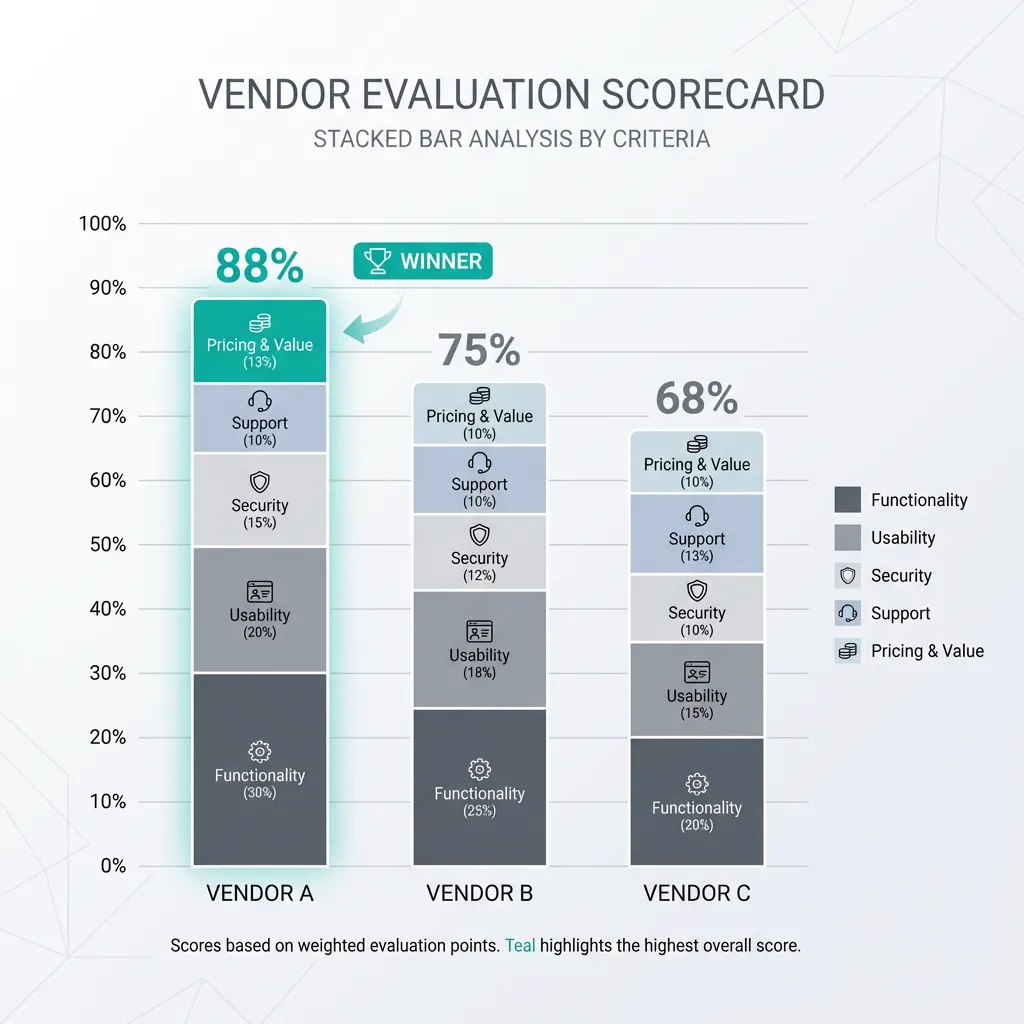

Presenting Results to Leadership

Executives don't want to see raw spreadsheets. They want the story.

Visualization Options

- The Radar Chart: Visualizes each vendor's strengths and weaknesses by category (e.g., Vendor A is lopsided, with a strong Technical score and a weak Price score, while Vendor B is balanced).

- The Executive Summary Table: Show only category-level scores (Technical, Price, Experience, Total). Hide sub-criteria unless asked.

- The Risk/Value Graph: X-Axis: Price. Y-Axis: Technical Score. Plot vendors to show where they stand visually.

Handling Tie-Breakers

What if Vendor A and Vendor B both score 87.5/100?

- The "Price Shootout": If technical scores are essentially equal (within 2%), the cheapest option wins.

- The "Blue Sky" Session: Invite both for a final presentation. Give them a specific, unscripted problem to solve in the room.

- Reference Calls Deep Dive: Call references again with harder questions. "What is the one thing you hate about them?"
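The "Price Shootout" rule is easiest to defend when it is written down precisely before scoring starts, so nobody argues later about what "essentially equal" means. This sketch assumes "within 2%" means the gap between the two technical scores is at most 2% of the higher score; the `Finalist` type, the `price_shootout` helper, and the figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Finalist:
    name: str
    technical_score: float
    price: float


def price_shootout(a: Finalist, b: Finalist, tolerance: float = 0.02) -> Optional[str]:
    """Return the cheaper finalist's name if the technical scores are within `tolerance`
    (as a fraction of the higher score); return None if the rule does not apply."""
    higher = max(a.technical_score, b.technical_score)
    if higher > 0 and abs(a.technical_score - b.technical_score) / higher > tolerance:
        return None  # technical gap too large: use another tie-breaker
    if a.price == b.price:
        return None  # identical prices: the shootout cannot separate them
    return a.name if a.price < b.price else b.name


a = Finalist("Vendor A", technical_score=42.0, price=480_000)
b = Finalist("Vendor B", technical_score=41.5, price=455_000)
print(price_shootout(a, b))  # Vendor B (gap is about 1.2% of 42.0)

c = Finalist("Vendor C", technical_score=40.0, price=430_000)
print(price_shootout(a, c))  # None (gap is about 4.8%)
```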

Consensus Meetings vs. Averaging Scores

The Problem with Averaging: On a 10-point scale, Evaluator A gives a 9 (tolerant of risk) and Evaluator B gives a 1 (averse to risk). The average is 5. The result looks "average," but the reality is "polarizing."

The Consensus Meeting: Bring Evaluators A and B into a room and ask, "Why is this a 9? Why is it a 1?" Often, B saw a risk A missed, or A saw an opportunity B missed. Goal: re-score after discussion. The final score should reflect the group's best judgment, not the mathematical mean.

Frequently Asked Questions

How many evaluators should we have?

- Minimum: 3 for perspective diversity

- Typical: 5-7 for significant procurements

- Maximum: Keep manageable; more isn't always better

Can we change weights after seeing proposals?

Never. This undermines objectivity and invites legal challenge. Finalize weights before opening the first proposal.

How do we handle mandatory requirements?

Score them as pass/fail. Failing a mandatory requirement typically disqualifies the vendor regardless of other scores, so do not spend time scoring the rest of a proposal that has failed a mandatory check.
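A pass/fail gate in front of the weighted scoring keeps disqualified proposals out of the matrix entirely. The requirement names, results, and the `mandatory_gate` helper below are hypothetical; in practice the checks would come from the RFP's own list of mandatory requirements.

```python
def mandatory_gate(
    checks: dict[str, dict[str, bool]],
) -> tuple[list[str], dict[str, list[str]]]:
    """Split vendors into those that pass every mandatory requirement and those that
    fail at least one (mapped to the requirements they failed)."""
    passed: list[str] = []
    failed: dict[str, list[str]] = {}
    for vendor, results in checks.items():
        misses = [requirement for requirement, ok in results.items() if not ok]
        if misses:
            failed[vendor] = misses
        else:
            passed.append(vendor)
    return passed, failed


# Hypothetical pass/fail results for each mandatory requirement
checks = {
    "Vendor A": {"Security certification": True, "24/7 support": True},
    "Vendor B": {"Security certification": True, "24/7 support": True},
    "Vendor C": {"Security certification": True, "24/7 support": False},
}
passed, failed = mandatory_gate(checks)
print(passed)  # ['Vendor A', 'Vendor B']
print(failed)  # {'Vendor C': ['24/7 support']}
```

Only the vendors in `passed` move on to weighted scoring; the `failed` mapping doubles as the written record of why each disqualified vendor was excluded.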

What about price scoring methodology?

Common approaches:

- Point ratio: Lowest price = max points (5); others = (Lowest Price / This Price) × 5. Scores are inversely proportional to price: a bid at twice the lowest price earns half the points (2.5).

- Range-based: Define score ranges for price tiers.

- Relative: Best price = 5, worst = 1, others interpolated.
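The three price-scoring approaches translate directly into small functions. This is a sketch: it assumes "others interpolated" means linear interpolation between the best and worst prices, and the range-based tiers (fraction above the lowest bid) are made-up thresholds that a team would set for itself before opening proposals. The prices are hypothetical.

```python
def point_ratio(prices: dict[str, float], max_points: float = 5) -> dict[str, float]:
    """Lowest price earns max_points; others earn (lowest / price) * max_points."""
    lowest = min(prices.values())
    return {v: lowest / p * max_points for v, p in prices.items()}


def relative(prices: dict[str, float], best: float = 5, worst: float = 1) -> dict[str, float]:
    """Lowest price = best score, highest price = worst score, linear in between."""
    low, high = min(prices.values()), max(prices.values())
    if high == low:
        return {v: best for v in prices}  # all bids identical
    return {v: worst + (best - worst) * (high - p) / (high - low) for v, p in prices.items()}


# Hypothetical tiers: (maximum fraction above the lowest bid, score)
TIERS = [(0.05, 5), (0.15, 4), (0.30, 3), (0.50, 2)]


def range_based(prices: dict[str, float], tiers=TIERS, floor: int = 1) -> dict[str, int]:
    """Score each bid by how far it sits above the lowest bid; beyond the last tier earns `floor`."""
    lowest = min(prices.values())
    scores = {}
    for v, p in prices.items():
        above = (p - lowest) / lowest
        scores[v] = next((s for limit, s in tiers if above <= limit), floor)
    return scores


prices = {"Vendor A": 400_000, "Vendor B": 500_000, "Vendor C": 450_000}
print({v: round(s, 2) for v, s in point_ratio(prices).items()})
# {'Vendor A': 5.0, 'Vendor B': 4.0, 'Vendor C': 4.44}
print(relative(prices))
# {'Vendor A': 5.0, 'Vendor B': 1.0, 'Vendor C': 3.0}
print(range_based(prices))
# {'Vendor A': 5, 'Vendor B': 3, 'Vendor C': 4}
```

The same three bids score quite differently under each method (Vendor B earns 4.0, 1.0, or 3), which is one more reason to fix the price methodology along with the weights before any proposal is opened.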

What if scoring is unanimous?

Great—document it as unanimous agreement. Still capture rationale for future reference.

Compare Vendor Specifications Objectively

SpecLens extracts specifications from vendor documents and creates side-by-side comparisons for objective evaluation matching your matrix criteria.

Evaluate Objectively

A well-designed evaluation matrix turns vendor selection from a political exercise into an evidence-based decision. Invest time in building your matrix; it pays back in better decisions and fewer challenges.
